What if the person you’re talking to on a video call isn’t real?

Or the voice on the other end of the line sounds just like your loved one, but it’s not?

AI is helping us work faster, but it’s also getting better at fooling us. From lifelike deepfakes to convincing cloned voices, the technology is blurring the line between reality and illusion. Generative AI tools are now sophisticated enough to deceive even the most tech-savvy individuals.

While AI has brought undeniable benefits, its deceptive capabilities have also opened the door for malicious actors. Whether used for scams, misinformation, or impersonations, AI’s power to mislead is quickly becoming a serious challenge we can’t ignore.

We dove deep into the internet and compiled the most famous, scandalous, or simply funny stories of people being deceived by AI. Then we asked internet users what they think of all this and how they protect themselves.

Let’s check out the results of our research.

AI deepfake statistics

Deepfake technology has been exploited for fraudulent activities for a while now, resulting in nearly $900 million in financial losses so far. In just the first six months of 2025, damages reached $410 million—surpassing the $359 million recorded for the entire year of 2024 and far exceeding the $128 million accumulated between 2019 and 2023.
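As a quick check on the figures above (all amounts in millions of US dollars), the three periods add up to the cumulative total, and the first six months of 2025 alone already exceed the whole of 2024:

$$
\underbrace{128}_{2019\text{--}2023} + \underbrace{359}_{2024} + \underbrace{410}_{\text{H1 2025}} = 897 \approx 900,
\qquad 410 - 359 = 51
$$

In other words, losses in the first half of 2025 were already about $51 million higher than the full-year 2024 total.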

To better understand how AI-generated content is impacting everyday people, we surveyed nearly 1,000 internet users. Here’s what we found:

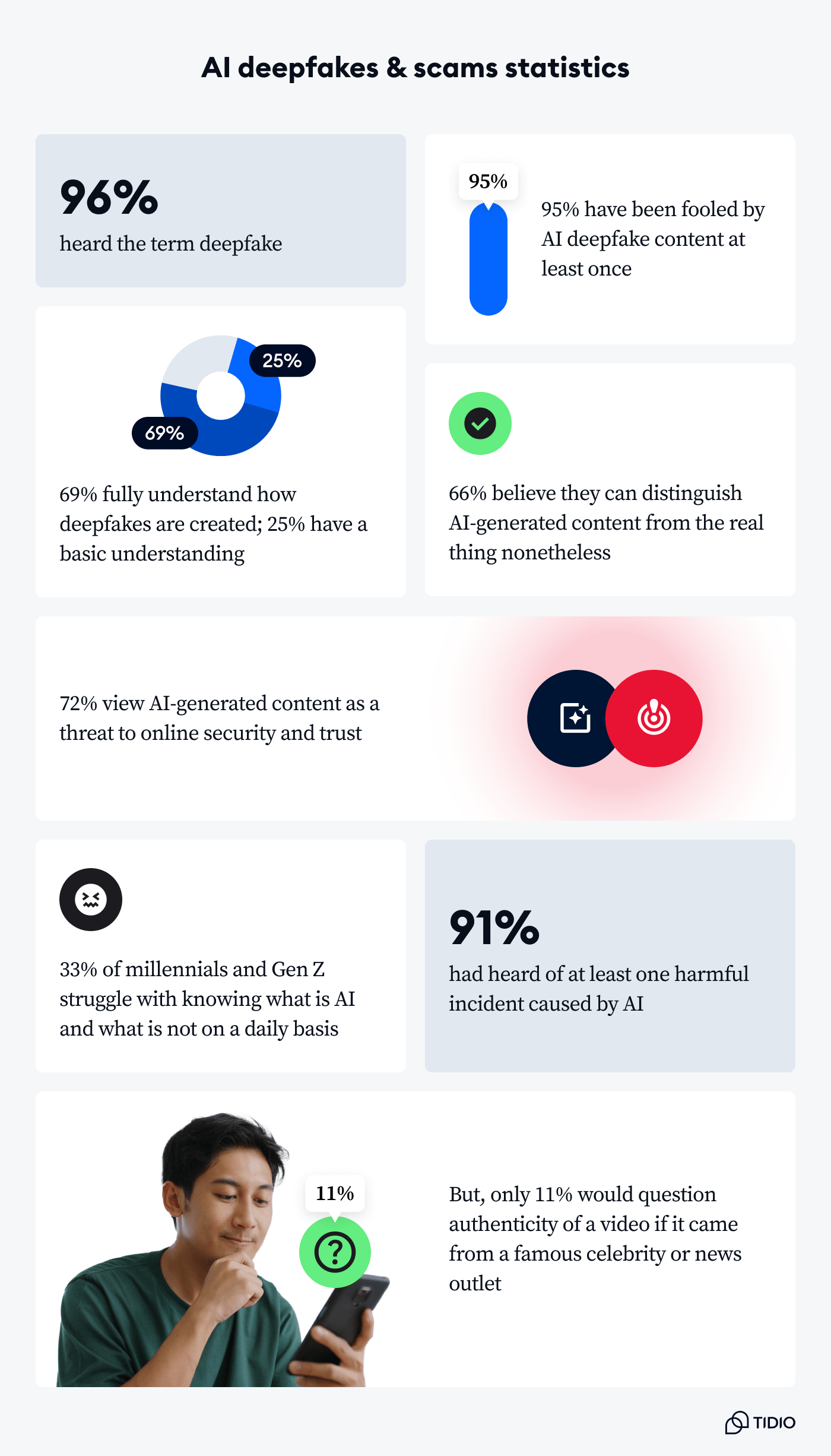

- More than 96% of people have heard of the term “deepfake”: awareness is widespread, but understanding remains varied

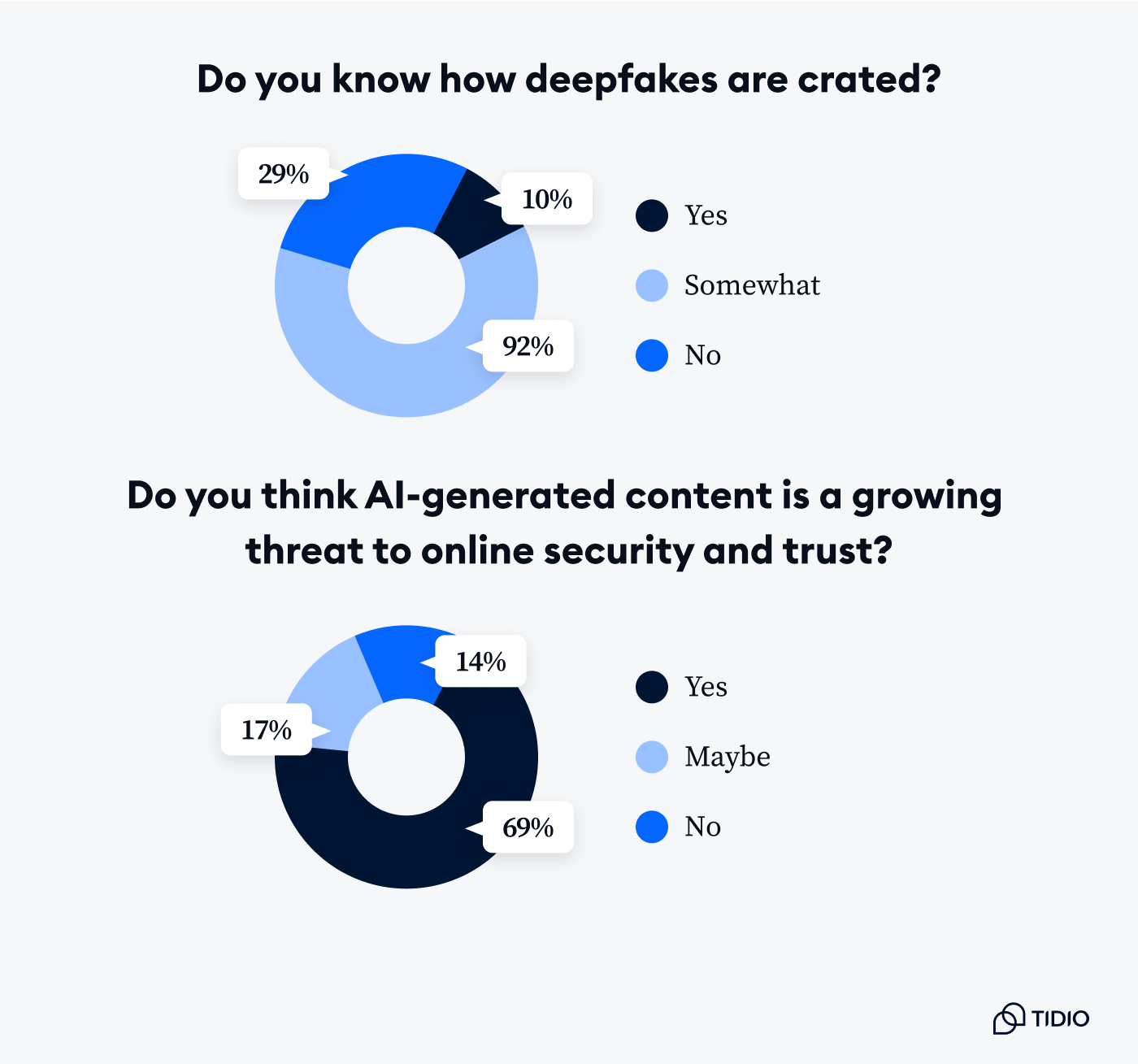

- About 69% of respondents say they fully understand how deepfakes are created, while another 25% claim a basic understanding

- Around 78% of people reported seeing AI-generated deepfake content online, whether they realized it immediately or not

- More than a quarter of millennials and Gen Z respondents (27%) struggle to tell what is AI-generated and what is not on a daily basis

- A whopping 95% admitted to being fooled by AI content at least once, believing it was real before finding out it wasn’t

- More than 71% have personally been misled or scammed by AI-generated content, whether through fake endorsements, phishing messages, or voice cloning

- Around 66% of people believe they can easily distinguish AI-generated content from the real thing, though real-world incidents suggest this confidence may be misplaced

- As many as 72% view AI-generated content as a serious and growing threat to online security and trust

- Around 91% had heard of at least one harmful incident caused by AI, such as scams or misinformation campaigns

- About 88% said they would trust a deepfake video if it came from a reputable news outlet or a celebrity they trust, and only 11% would question its authenticity

These numbers highlight the urgency of media literacy and critical thinking in the age of synthetic media.

How to protect yourself and your business from AI deception

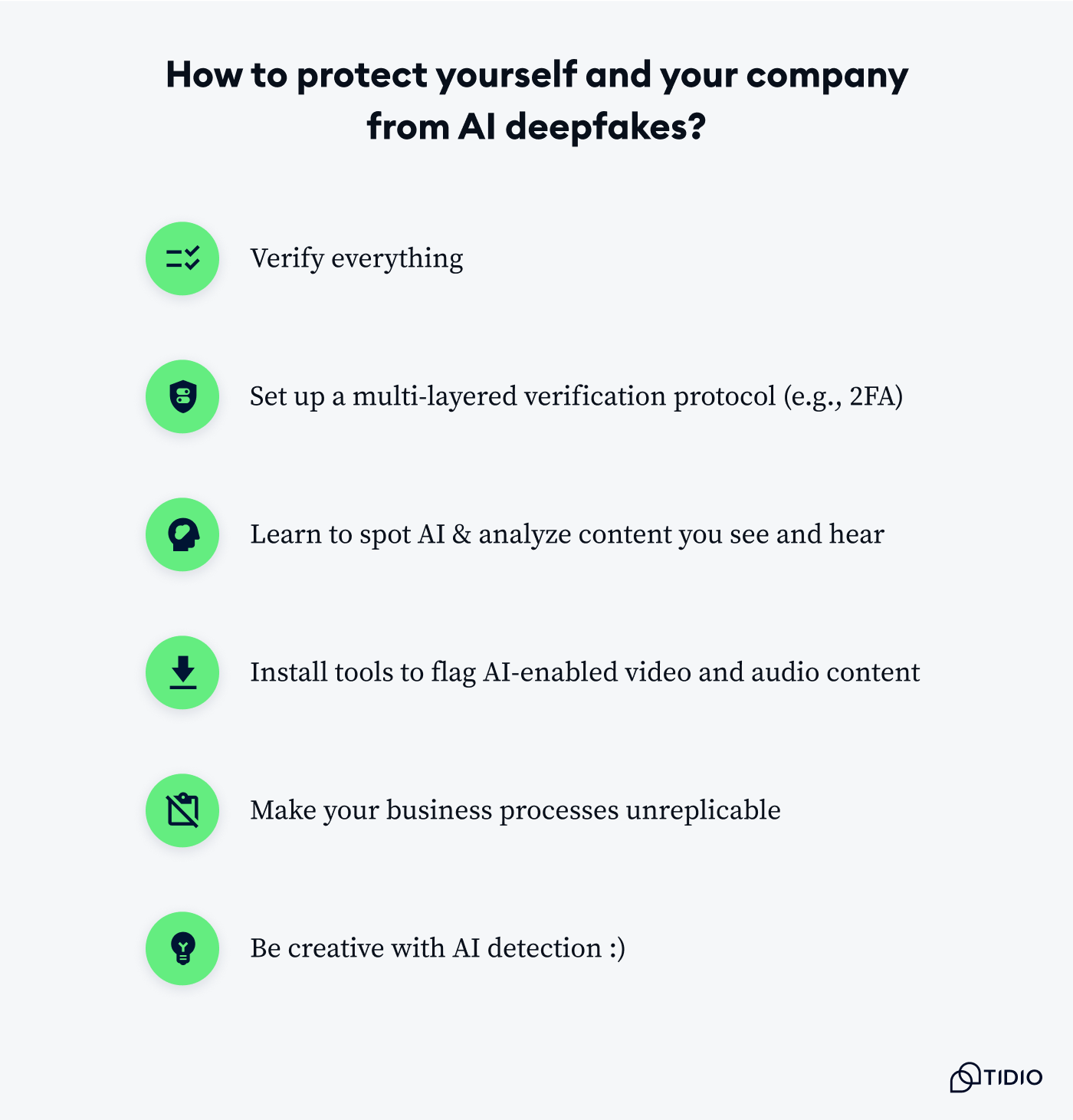

Let’s go over the most promising tips to protect you, your business, and your sanity when it comes to hyper-realistic deepfakes and scams.

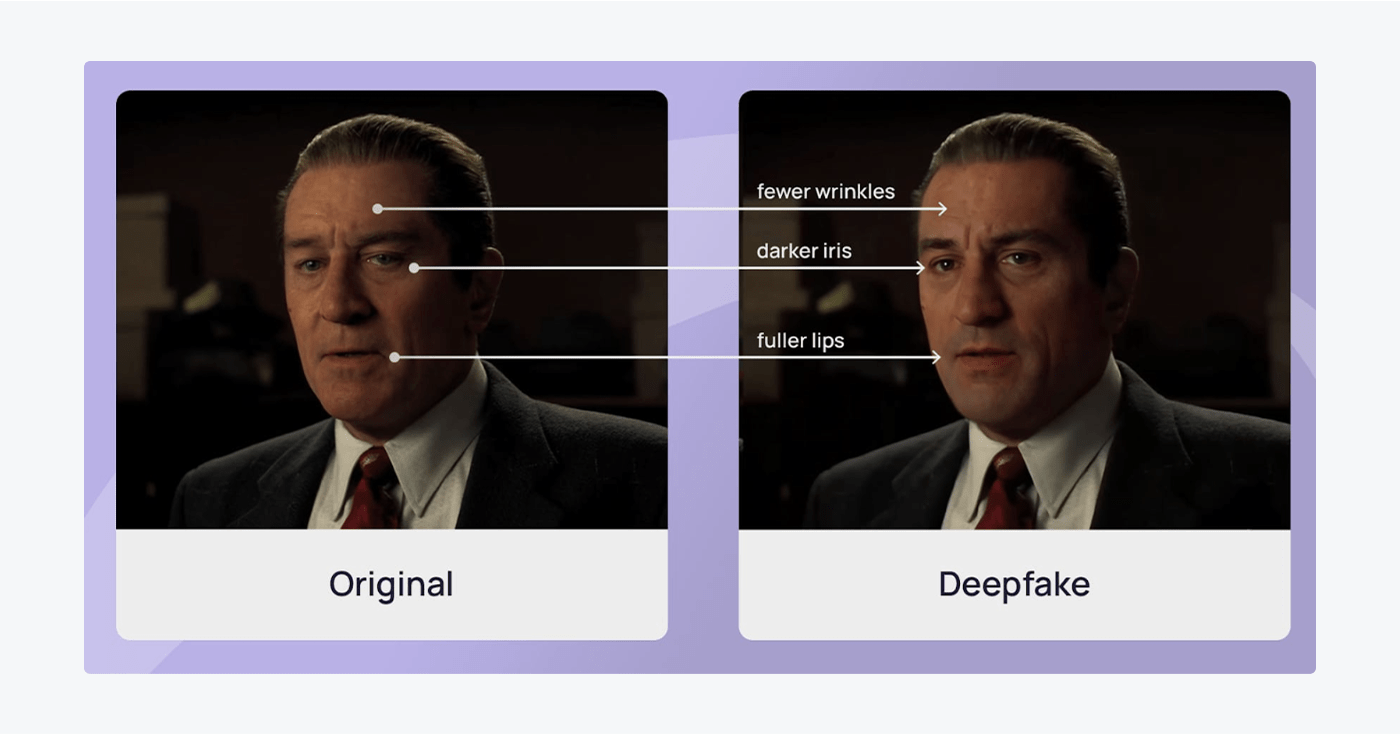

Spot the signs

AI-generated content can be surprisingly convincing, but most fakes still leave behind subtle clues. Pay attention to small inconsistencies, especially in videos. Deepfakes often feature unnatural eye movement, irregular blinking, awkward hand gestures, or distorted teeth. Voice-cloned audio might have flat emotional tones or slight mismatches in timing.

Also, don’t automatically trust content shared by verified social media accounts. With account hacks and impersonation rampant, even a blue checkmark doesn’t guarantee authenticity.

Tools and awareness

Fighting AI deception starts with awareness. Use detection tools like Hive, Sensity, or Deepware Scanner to analyze suspicious videos or voices. Another effective trick is reverse image searching: if you come across a photo that seems too polished or bizarre, run it through Google Images or a tool like TinEye to see where else it appears online and whether it has already been flagged as fake. Some browser extensions can also flag synthetic content in real time. These tools are constantly evolving to keep up with AI advancements.

Education is equally crucial. Teach your family, especially seniors, how deepfakes work and what common scams look like. AI impersonators often target the elderly with emotional appeals or financial fraud. Even showing them examples of past AI hoaxes can strengthen their defenses.

Trust but verify

Scammers and misinformation campaigns rely on emotional responses. If a video or voice clip sparks panic, outrage, or urgent decision-making—pause. Take a moment to verify the source. Can you find the same clip on multiple reliable outlets? Is the person in question also issuing a public statement? Are others reporting it as fake?

Expert tips and experiences

Let’s now take a look at what experts share when it comes to protecting yourself and your business from AI scams.

- Adopt a new mindset:

The best defence against AI scams and deepfakes isn't new technology; it's a new mindset. We have to treat every urgent, out-of-the-blue request with a healthy dose of skepticism. The golden rule is to ‘verify before you act.’ If a call or message asks for sensitive information or a quick transfer of funds, hang up and use a trusted, pre-saved contact number to verify the request. This simple action disrupts the scammer's timeline and gives you the control back.

- Use a multi-layered verification protocol:

If I get a strange email or call from a "colleague," I always check through a different, already set up channel, like our encrypted messaging system or a direct call to their verified number.

- Verify everything:

Use a safe word or security question to confirm identities, especially in family emergency scams, which have become more convincing now that voice cloning is easily available. Also, be cautious of celebrity endorsements on social media, since many are fake. Verify any offer that seems legitimate directly on the celebrity’s official website.

- Use creative screening to detect AI-enabled impostors:

As AI-driven deepfake scams grow more sophisticated, it's important to find effective ways to detect them. One rising threat involves North Korean operatives impersonating Western IT professionals by using AI to craft convincing CVs, cover letters, and even deepfake appearances during interviews. To protect your time, resources, and company data, consider asking creative questions that only someone with the claimed background could answer. These might touch on pop culture, current events, or even involve a simple request to speak a sentence in their supposed native language. Such creative tactics can help expose impostors immediately, even when traditional vetting methods fall short.

- Make it harder to replicate any component of your business:

It's true that deepfakes are getting better at copying voices and faces, but they still have a difficult time with context. So we make our internal processes very hard to mimic. For example, we have naming conventions, unique workflows, and cloud infrastructure standards that outsiders wouldn’t know. That alone makes it harder for AI-generated attacks to blend in.

One thing we’ve done is create a “known communication style” guideline for our team and major partners. That means everyone knows what kind of language we use in emails, what our receipts look like, and how we speak in videos. That way, if something weird pops up, even if it looks like us, people can tell it’s off.
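For teams or families that want to turn "verify before you act" into a written rule, the logic fits in a few lines. The Python below is a minimal, hypothetical sketch, not a tool any of the quoted experts described: the contact list, the `Request` fields, and the `decide` function are illustrative assumptions. It encodes three ideas from the tips above: urgent or money-related requests always trigger a check, the check happens through a contact saved in advance rather than the channel the request arrived on, and an agreed safe word must match.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical contact list, saved *before* any request arrives.
# Numbers use the fictional 555-01xx range.
TRUSTED_CONTACTS = {
    "cfo": "+1-555-0100",
    "grandson": "+1-555-0199",
}


@dataclass
class Request:
    claimed_sender: str           # who the caller or emailer says they are
    asks_for_money_or_data: bool  # transfer, bail money, passwords, etc.
    is_urgent: bool               # "do it now", "don't tell anyone"


def decide(
    req: Request,
    confirmed_on_callback: bool,
    safe_word: Optional[str] = None,
    expected_safe_word: Optional[str] = None,
) -> str:
    """Return "proceed", "verify first", or "reject" for an incoming request."""
    # Routine, non-sensitive requests need no extra step.
    if not (req.asks_for_money_or_data or req.is_urgent):
        return "proceed"

    # Without a contact saved in advance there is no independent channel
    # to check on, so the safe default is not to act.
    if req.claimed_sender not in TRUSTED_CONTACTS:
        return "reject"

    # Hang up and call the saved number yourself. The incoming number is
    # never consulted, because caller ID can be spoofed.
    if not confirmed_on_callback:
        return "verify first"

    # If a safe word was agreed in advance, it must match exactly.
    if expected_safe_word is not None and safe_word != expected_safe_word:
        return "reject"

    return "proceed"


# Example: an urgent "bail money" call that claims to be from a grandson.
call = Request("grandson", asks_for_money_or_data=True, is_urgent=True)
print(decide(call, confirmed_on_callback=False))  # verify first
print(decide(call, True, safe_word="pelican", expected_safe_word="pelican"))  # proceed
```

The design choice worth noting is that only a callback you initiate counts as confirmation; a request that "checks out" on the same line it came in on proves nothing.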

Now, let’s look at the deepfake stories that have shaken the world. Knowing about these cases can help you protect yourself and your business from similar threats.

Famous deepfakes and scams: compilation

There have been thousands of stories about people fooled by deepfakes and AI scams. Some are funny, some are deeply anxiety-inducing, and many have gone viral online. Let’s look at the most notable examples.

AI in the workplace and recruitment

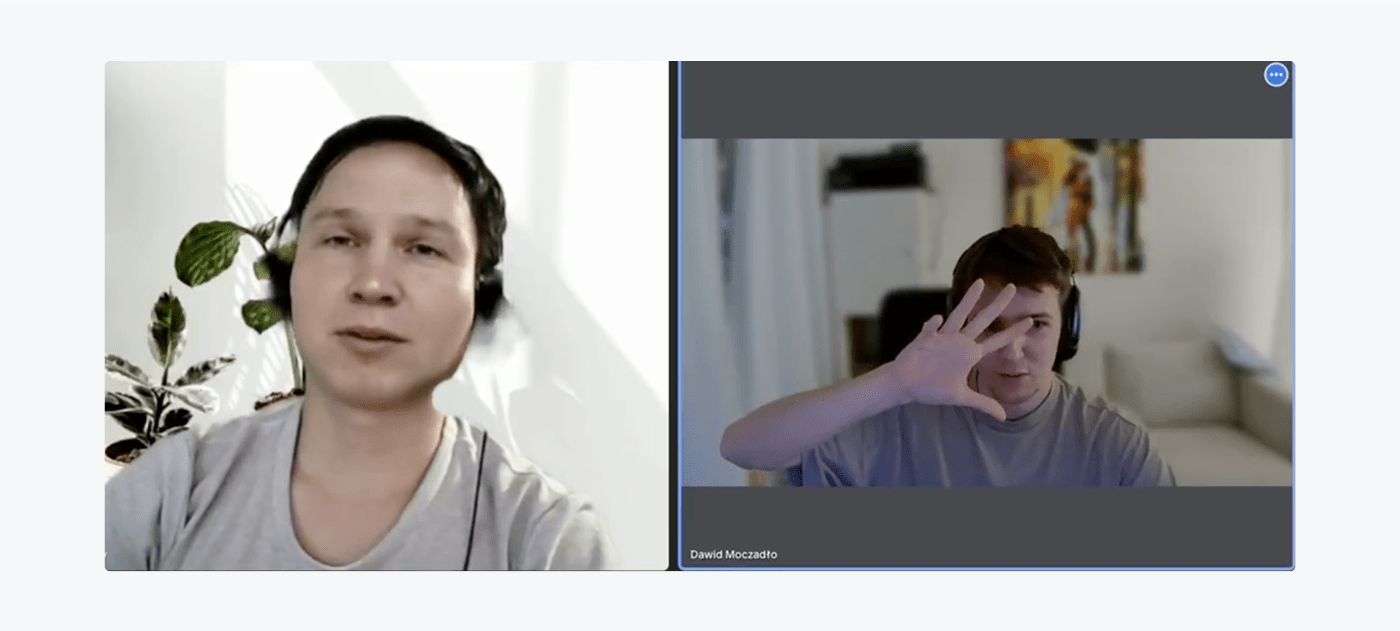

- Polish politician deepfake (2025): A cybersecurity firm discovered that a job candidate was using a deepfake face modeled after Polish politician Sławomir Mentzen. The scammer had generated a flawless resume and a convincing AI-generated video presence. The accent sounded a little off, but nothing alarming on its own.

However, when asked to put his hand in front of his face on a video call, the applicant was unable to do so, revealing the lie. As the story spread, it turned out that more than one company had encountered this specific deepfake.

- North Korean deepfake employees: Building on the example above, in 2024, cybersecurity firm KnowBe4 confirmed it had been targeted by such a scheme when the company was duped into hiring a fake IT worker from North Korea.

A January 2025 Google report confirms that North Korean state-sponsored hackers are increasingly using generative AI for cyber operations, including reconnaissance, infrastructure mapping, and malware development. They use large language models to help pose as IT professionals, drafting CVs and cover letters and researching roles on sites like LinkedIn to secure jobs at Western firms and funnel income back to the regime.

A CrowdStrike VP noted that even a simple, culturally sensitive question—like “How fat is Kim Jong Un?”—can unmask impostors, who often disconnect to avoid any perceived disrespect that could lead to punishment. While unconventional, such tactics show the value of creative screening methods in identifying AI-backed deception.

Some applicants from Asia try to pass themselves off as Eastern European developers. They create fake CVs, often listing degrees from Polish or Ukrainian universities, and build LinkedIn profiles that look legitimate at first glance. But when we begin the interview with a simple question in Polish or Ukrainian, things fall apart quickly. It’s not the kind of deepfake you’d see in the news with celebrities, but it’s definitely a form of AI-enabled deception.

- $25M scam in Hong Kong (2024): A finance employee joined a Zoom meeting with what looked like her executive team. Every participant was a deepfake. She transferred $25 million believing it was a real internal company discussion.

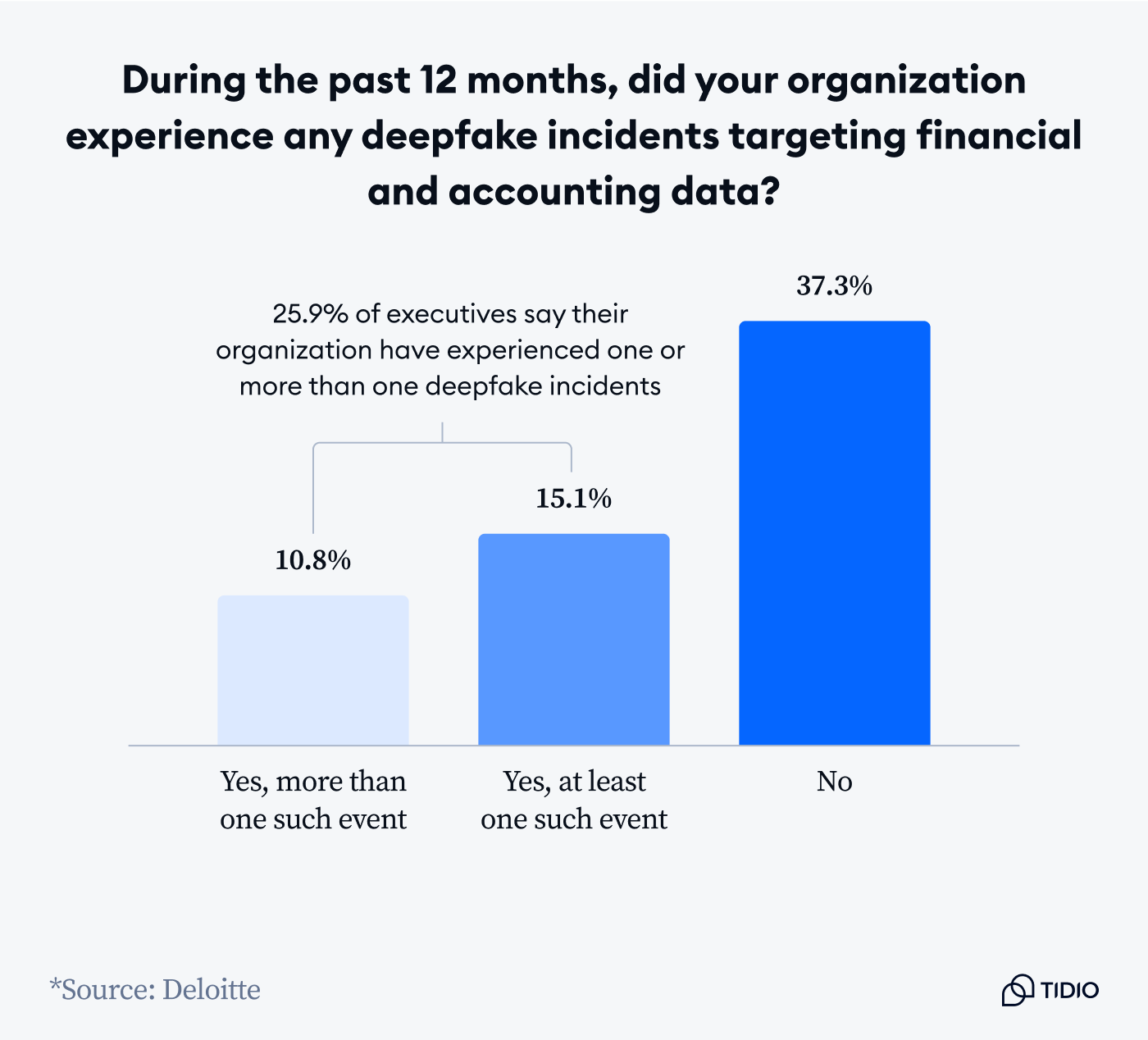

Deepfake scams targeting companies’ financial data are on the rise: as many as 25% of executives say their organization has been targeted by such a scam at least once.

AI voice cloning scams

- Arizona mom ransom call (2023): A mother nearly paid a ransom after hearing what sounded like her sobbing daughter on the phone. It was an AI-generated voice, cloned from social media videos.

- Canada grandparents scam (2024): A couple was tricked by a scammer using their grandson’s cloned voice, asking for bail money.

This type of scam is becoming increasingly common. Here’s what one Reddit user shared from personal experience:

Same thing happened to my grandmother a few years ago. They actually knew my name and knew she was my grandmother (there are websites out there that list you and all your relatives). She was totally prepared to pay them too. She went against their instructions not to call my parents and I just so happened to be off of work that day so we were able to stop her.

- UK energy firm CEO scam (2019): The insurance firm Euler Hermes reported a case where fraudsters used AI voice cloning to trick the CEO of a UK subsidiary of a German energy company into transferring €220,000.

The callers imitated the voice of the parent company’s CEO, claiming the funds were needed to finalize an acquisition. The money was rerouted to international accounts before anyone realized it was a scam. Experts called it one of the first verified cases of AI voice cloning used in a targeted corporate fraud.

Many similar voice scams have followed, targeting both businesses and individuals. In California, for example, a man lost his savings after scammers used his son’s cloned voice to claim he was in trouble and needed bail money.

Political deepfakes and misinformation

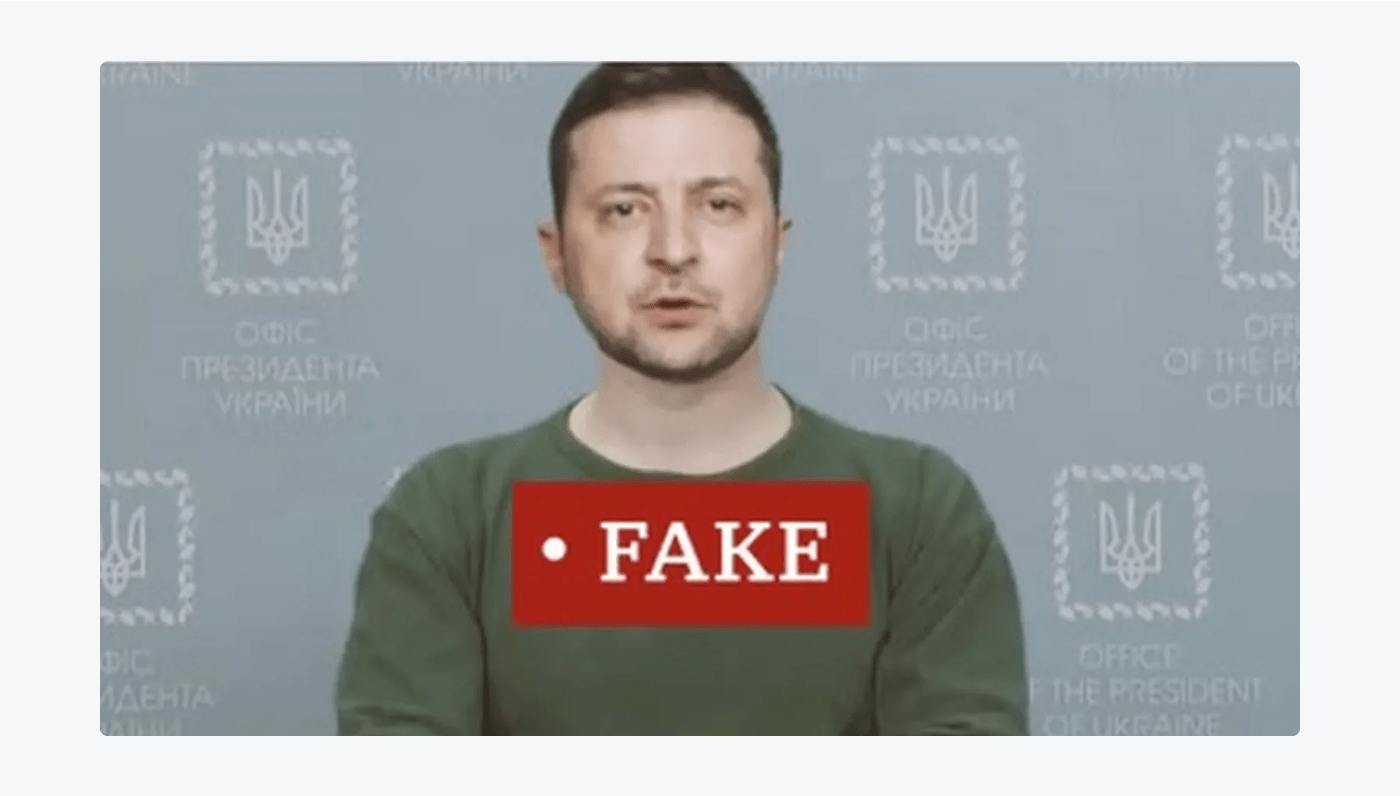

- Zelensky deepfake (2022): During the war in Ukraine, a deepfake of President Zelensky appeared online. It showed him in military uniform, standing at a podium, and seemingly calling on Ukrainian troops to lay down their weapons and surrender to Russian forces.

The video was shared across social media and even briefly aired on a hacked Ukrainian news site. Although the quality of the deepfake was low by today’s standards (his voice and lip sync were clearly off), it still caused confusion before being debunked by Ukrainian officials. The government released a real video of Zelensky, in the same attire, urging citizens to ignore the fake. It marked one of the first major instances of a deepfake used in psychological warfare.

- Biden robocall (2024): Just ahead of the New Hampshire Democratic primary, thousands of voters received a robocall featuring what sounded like President Joe Biden urging them not to vote.

The voice, calm and familiar, said that participating in the primary would only “help Republicans” and suggested staying home as a show of unity. The call used a high-quality AI-generated version of Biden’s voice and prompted immediate concern among election officials. Investigations were launched into potential voter suppression and election interference. Experts later confirmed the audio was a deepfake, and state officials labeled it a “dangerous example of AI-powered disinformation targeting democratic processes.”

Election deepfakes are a growing concern. So far, as many as 38 countries have faced election-related deepfake incidents. Of the 87 countries that have held elections since 2023, 33 experienced such incidents.

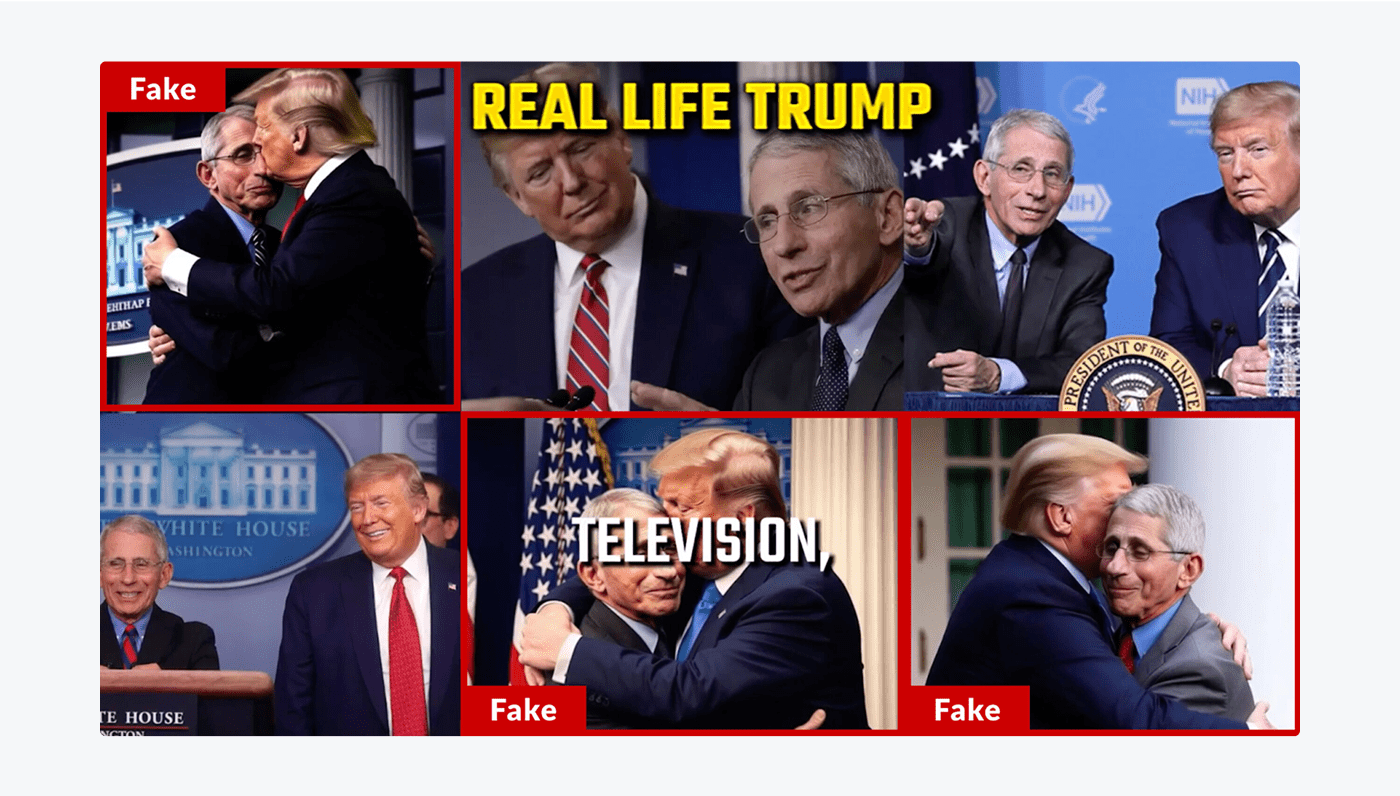

- DeSantis campaign uses fake Trump photos (2023): During the 2023 Republican primary race, Ron DeSantis’ campaign released a series of attack ads on social media, some of which included AI-generated images of Donald Trump hugging Dr. Anthony Fauci.

The intent was clear: to visually associate Trump with Fauci and criticize his handling of the COVID-19 pandemic. The images, while not explicitly labeled as AI-generated, were convincing enough to be mistaken for real photographs by many viewers.

Political analysts and journalists quickly flagged the images as manipulated, sparking widespread debate over the ethics of using deepfakes in political messaging. Critics warned that these tactics could open the door to a dangerous normalization of synthetic media in campaigns, where truth becomes optional and perception is easily engineered.

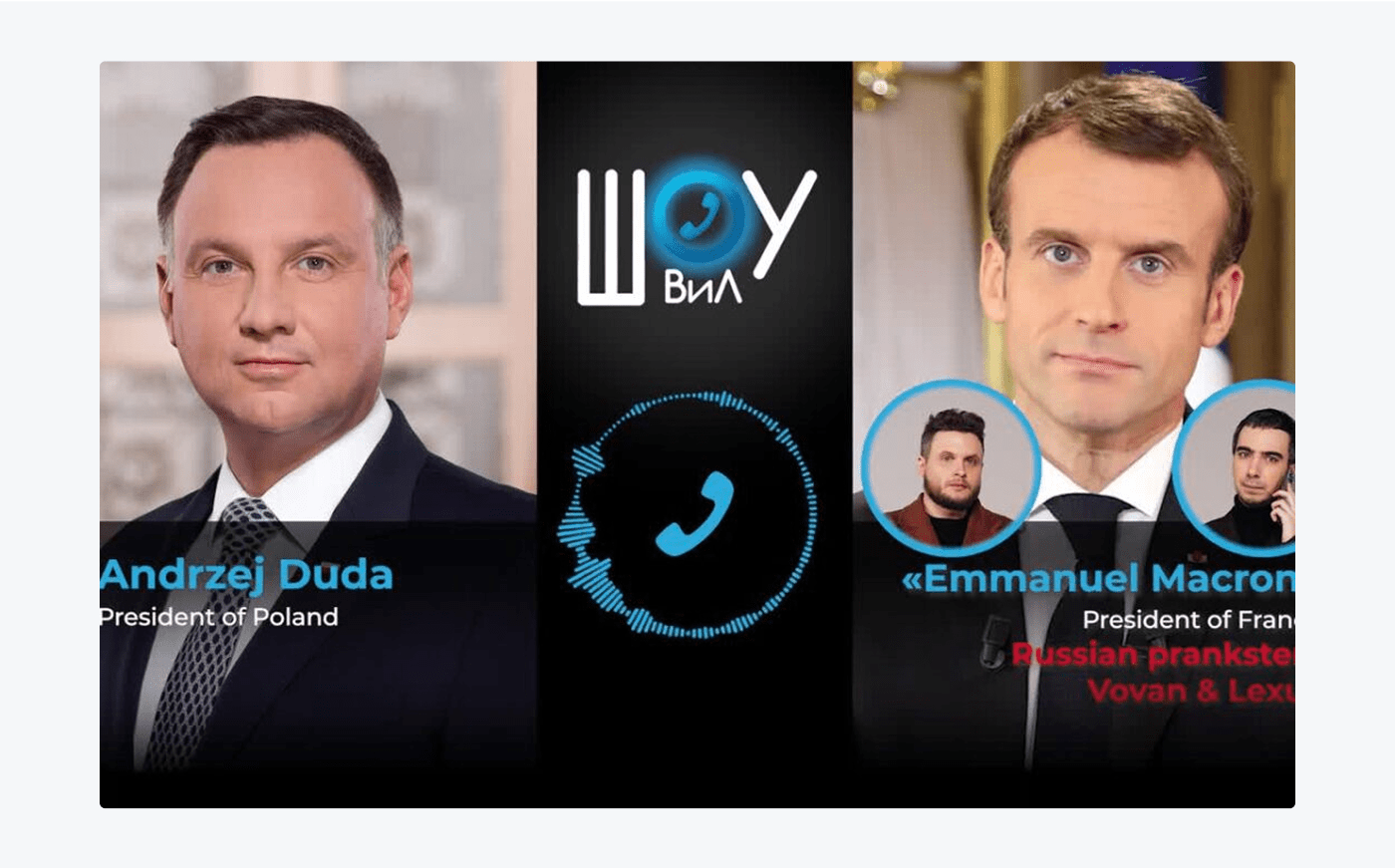

- Fake Macron call (2022): In the aftermath of a missile strike on Polish territory in 2022, Polish President Andrzej Duda spent over seven minutes on a call with someone he believed to be French President Emmanuel Macron.

But it was actually a deepfake voice impersonation by Russian pranksters Vovan and Lexus. The duo, known for targeting world leaders, engaged Duda in a serious conversation about the missile’s origin, potential NATO responses, and comments from other global leaders. Duda disclosed sensitive diplomatic positions during the call before growing suspicious and abruptly ending it.

A recording of the conversation was later posted online, prompting Duda’s office to confirm a hoax had occurred. This marked the second time Duda had been duped by the same pranksters.

- Trump shares deepfake arrest video of Obama (2025): In July 2025, Donald Trump posted a deepfake video showing Barack Obama being arrested and jailed, complete with handcuffs and a prison jumpsuit, soundtracked by “YMCA.”

The clip, which blended AI-generated visuals with real 2016 Oval Office footage, was posted in response to conspiracy claims by DNI Tulsi Gabbard about an Obama-led “coup.” Critics slammed the video as politically dangerous misinformation, warning that it blurred the line between satire and deliberate deception.

Celebrity deepfakes and commercial scams

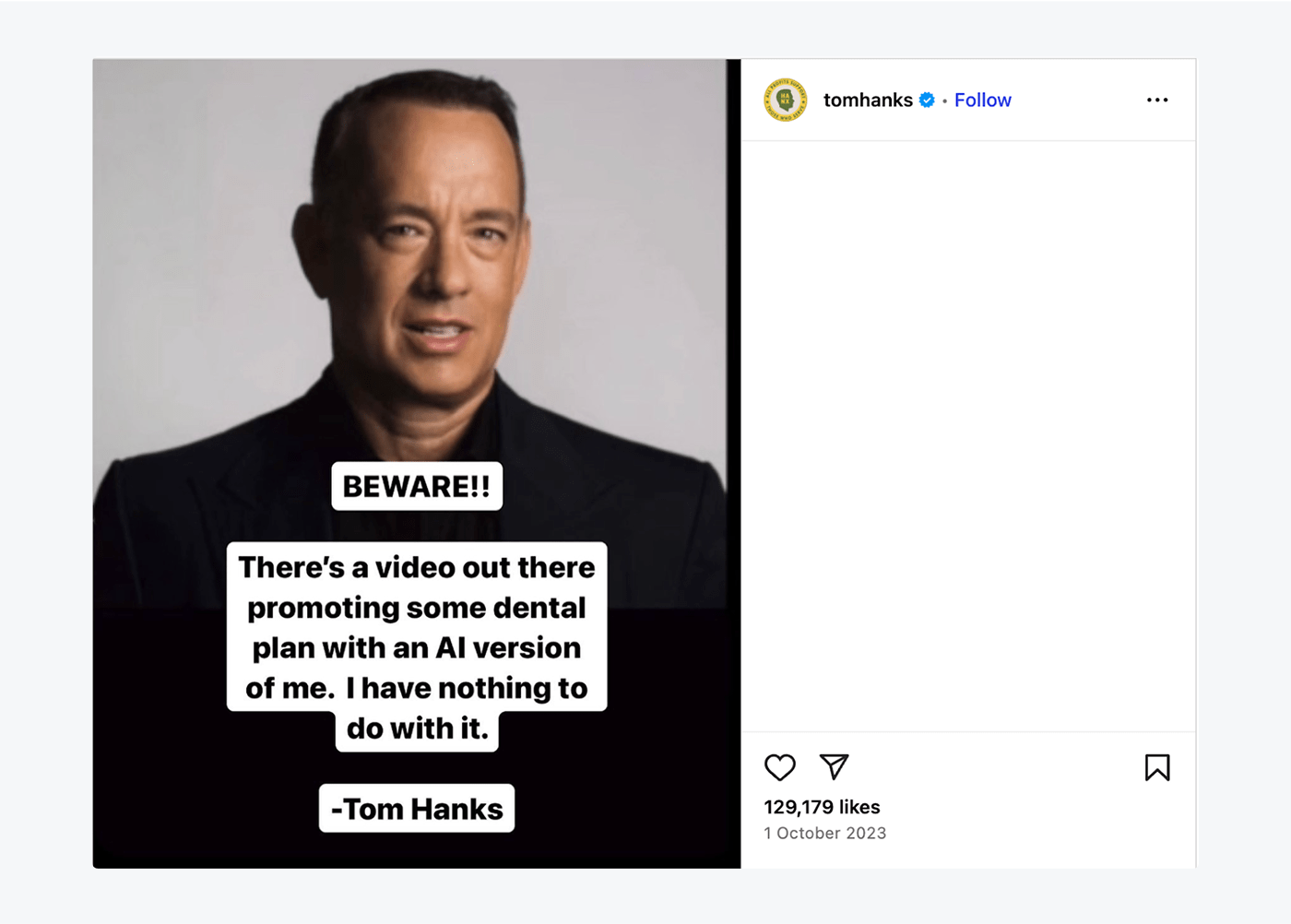

- Tom Hanks dental ad (2023): In October 2023, a video circulated online featuring what appeared to be actor Tom Hanks endorsing a dental insurance plan.

The footage showed a realistic likeness of Hanks speaking directly to the camera in a style consistent with other celebrity testimonials. However, the actor quickly took to Instagram to warn his fans: “Beware!! There’s a video out there promoting some dental plan with an AI version of me. I have nothing to do with it.” The deepfake had been created without Hanks’ consent using generative AI, sparking outrage from fans and experts alike.

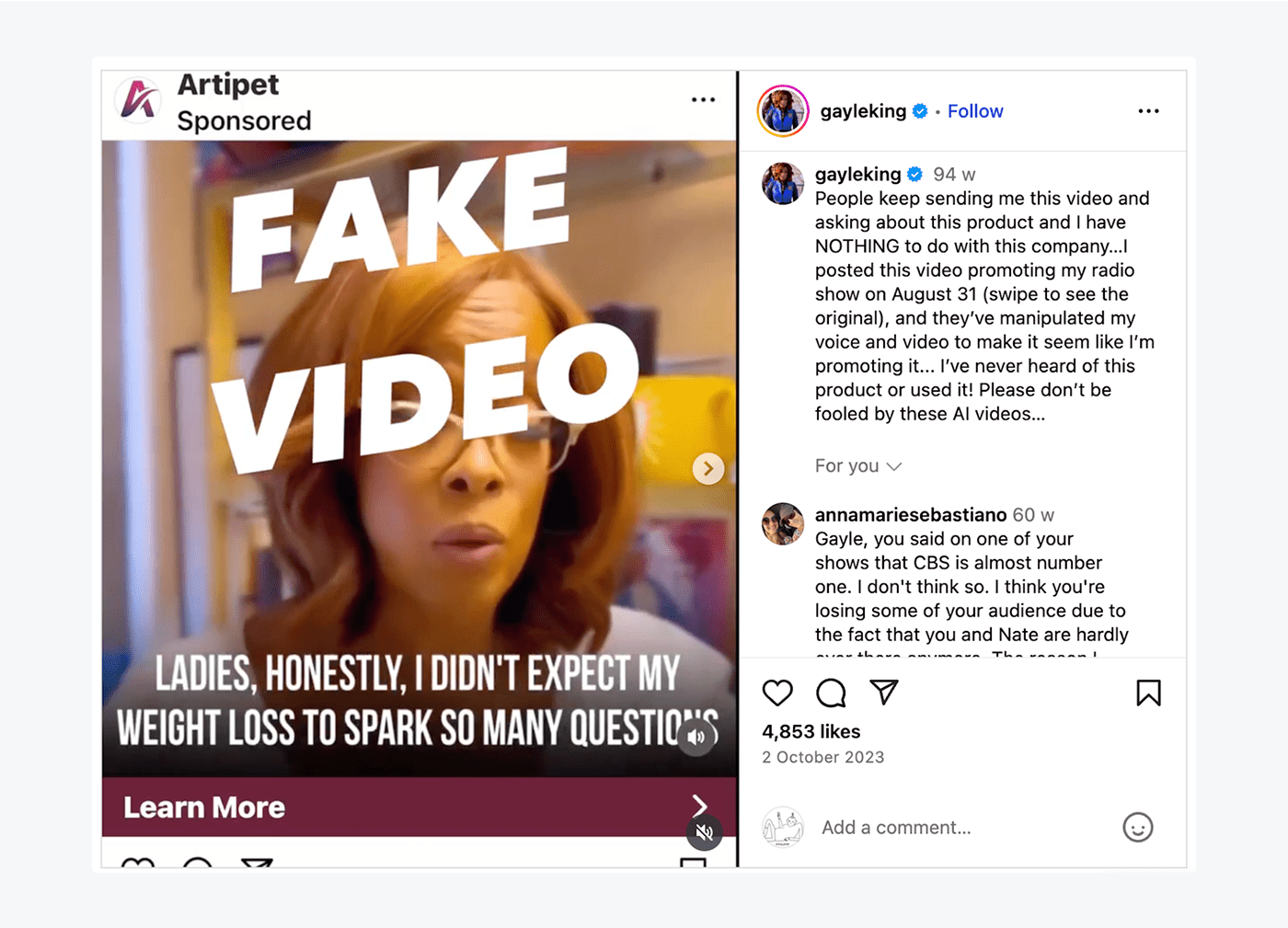

- Gayle King weight loss ad (2023): In late 2023, CBS anchor Gayle King alerted her followers to a deceptive online ad that used a deepfake version of her likeness to promote a bogus weight loss product.

The AI-generated video featured a convincing replica of King endorsing pills she had never heard of, let alone approved. She publicly denounced the ad on Instagram, calling it “fraudulent” and warning followers not to fall for it. The deepfake not only mimicked her face and voice, but also inserted fake testimonials and doctored quotes.

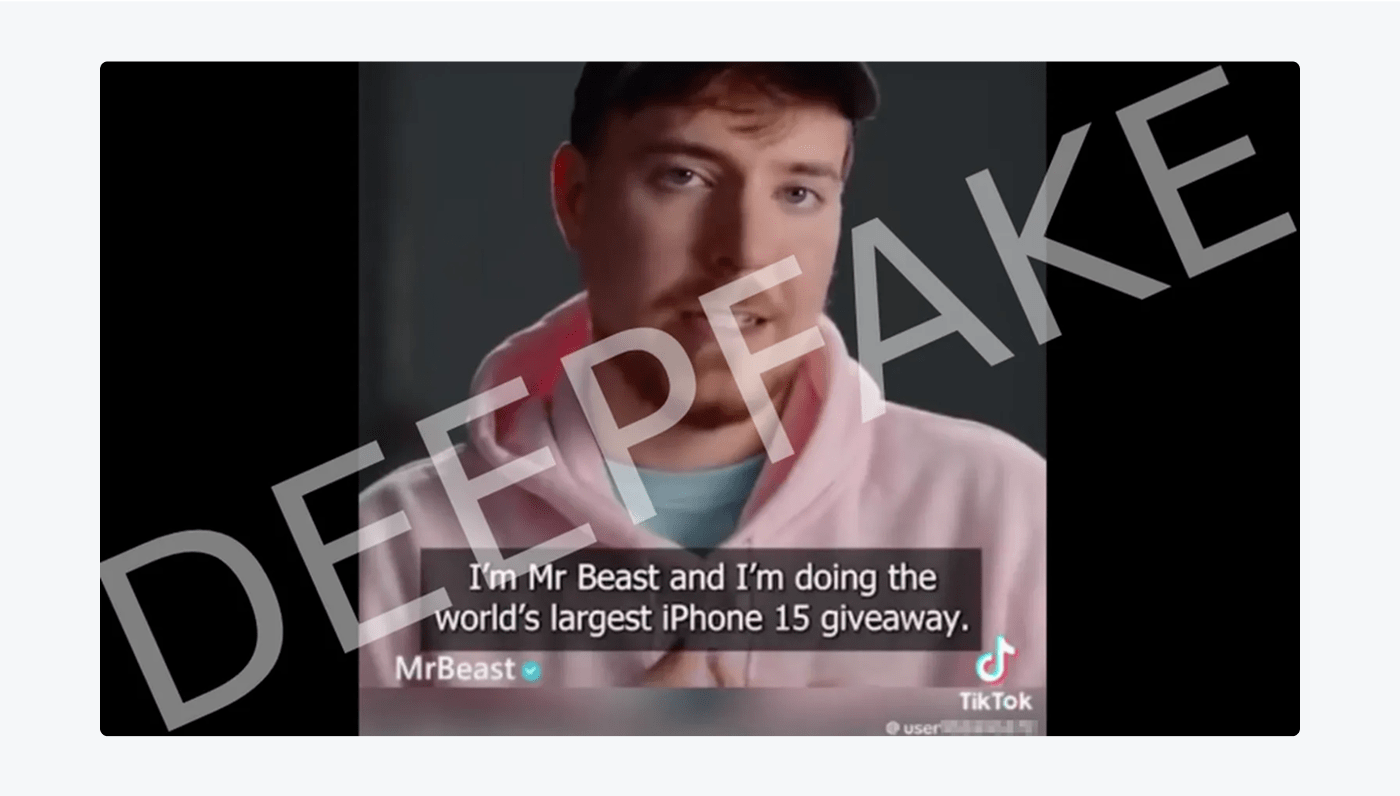

- MrBeast iPhone giveaway (2023): In 2023, a highly convincing deepfake video of YouTube star MrBeast began circulating on TikTok, in which he appeared to announce a promotional offer for iPhones priced at just $2.

The video mimicked MrBeast’s signature delivery style and visual branding so accurately that many users believed it was legitimate. Scammers used the clip to direct viewers to phishing websites designed to steal credit card details under the guise of claiming their “discounted” iPhone. MrBeast quickly responded on social media, clarifying that the video was fake and urging fans to be cautious.

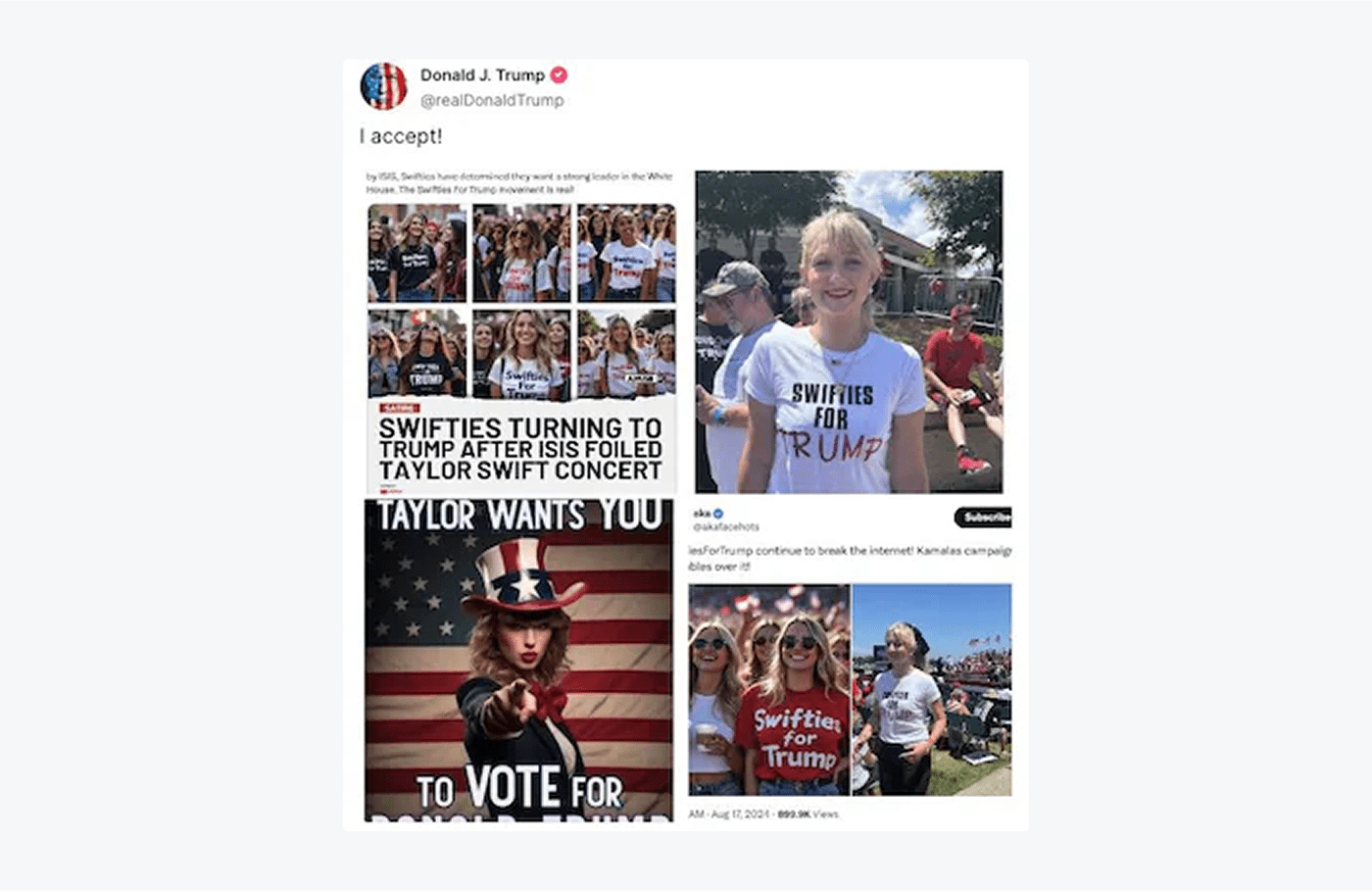

- Taylor Swift fake endorsements (2024): Before the 2024 election, AI-generated images and videos of Taylor Swift and her fans began circulating online, falsely portraying her as a supporter of Donald Trump’s presidential campaign. Trump himself shared some of them.

The deepfakes showed her and her fans wearing Trump-themed merchandise and delivering political endorsements, despite the singer never having made such statements publicly. Later, Taylor Swift endorsed Kamala Harris on her official Instagram account.

Viral AI memes and image fakes

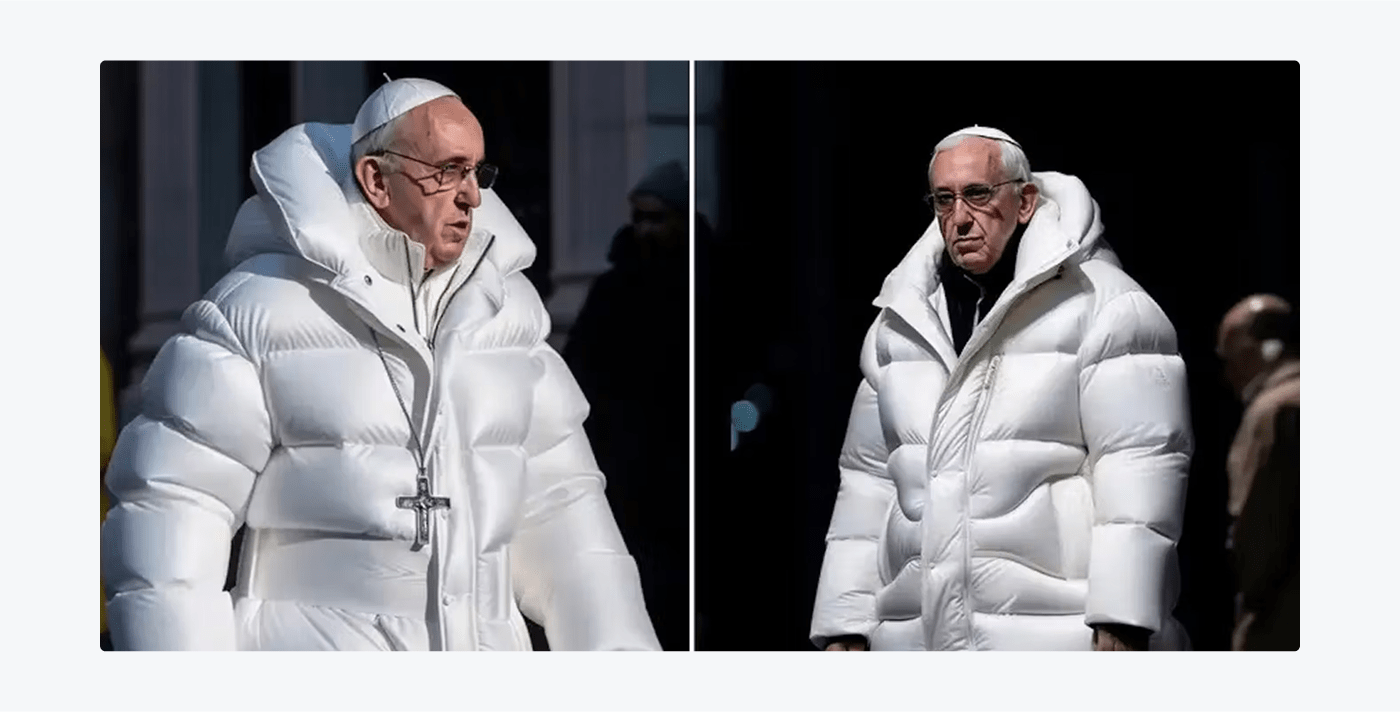

- Pope in a puffer jacket (2023): An AI-generated image of the late Pope Francis in a white puffer coat fooled millions online, including celebrities.

The photo, which showed the Pope striding confidently in an oversized Balenciaga-style jacket, was created using the AI tool Midjourney and went viral on Twitter and Reddit. Many users believed it was real, praising the Pope’s unexpected fashion sense before learning it was fake.

The incident marked one of the first major cases of an AI-generated image going truly viral. It prompted widespread discussion about how easily visual misinformation can blend into mainstream social media.

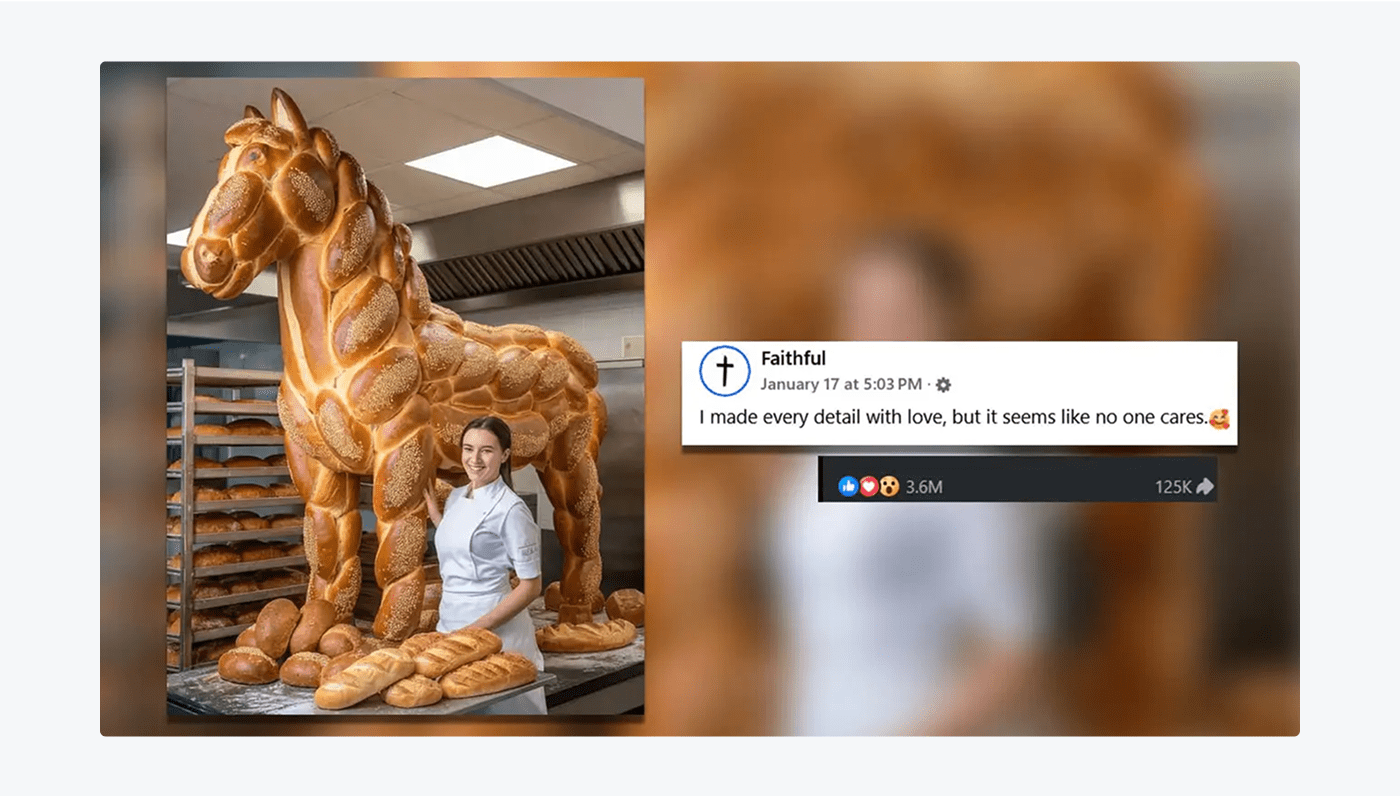

- Facebook’s AI craft flood: Older adults shared surreal AI-generated images of giant crocheted cats, bread horses, and fake DIY crafts, believing them to be real. Such posts generated tons of reactions and comments, with many people taking them quite seriously.

Academic and media hoaxes

- ChatGPT lawyer case (2023): A New York lawyer representing a client in a personal injury lawsuit relied on ChatGPT to help draft a court filing, only to discover that the AI had fabricated six entirely fictional case citations.

The lawyer, Steven A. Schwartz, submitted the brief in federal court without verifying the references, which included non-existent precedents and opinions attributed to real judges. When opposing counsel raised concerns, the court launched an inquiry and demanded evidence of the sources. It quickly became clear that the cited cases did not exist, prompting a formal hearing. Schwartz admitted he had used ChatGPT, unaware that it could generate convincing but false information, a phenomenon known as AI hallucination.

- Texas professor accuses entire class (2023): A professor used ChatGPT to check essays and wrongly accused students of cheating after the AI falsely claimed it had written their work.

- The Irish Times hoax (2023): In May 2023, The Irish Times published a provocative opinion piece criticizing young Irish women for their use of fake tan, sparking heated debate online.

The article, written under the name “Ava,” claimed that the trend perpetuated cultural appropriation and unrealistic beauty standards. However, internet detectives soon uncovered that the author did not exist, and the piece had been largely written by ChatGPT. The accompanying profile photo was also AI-generated.

After the revelation, The Irish Times issued an apology and removed the article, admitting it had been deceived by a fake submission and pledging to strengthen its editorial screening processes.

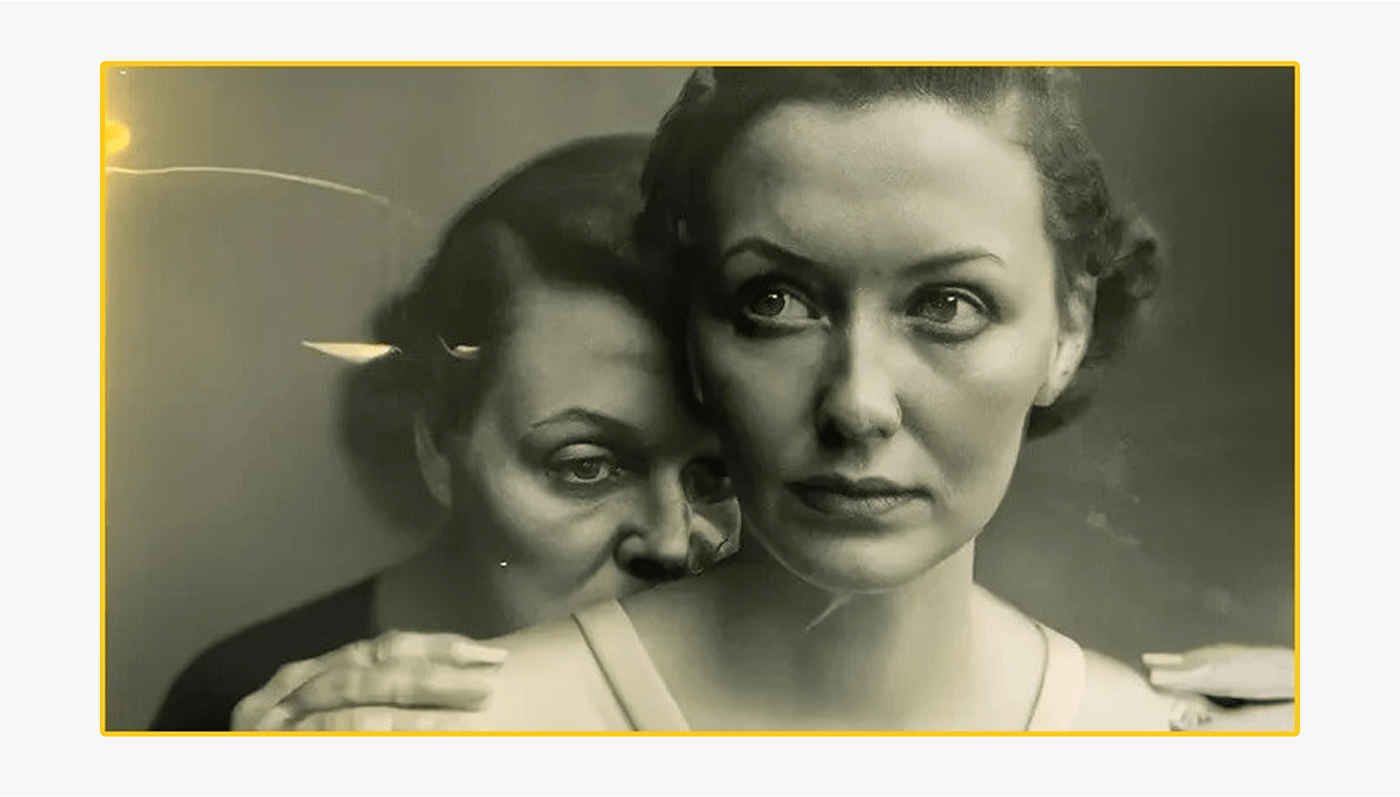

- AI image wins photography prize (2023): In 2023, German artist Boris Eldagsen submitted a striking black-and-white portrait titled The Electrician to the prestigious Sony World Photography Awards. And he won!

The haunting image, featuring a surreal, vintage-style scene of two women from different eras, was praised for its originality and emotional depth. But after receiving the award, Eldagsen revealed that the image had been created entirely using AI, not a camera. He declined the prize, stating that he had entered the contest to spark a conversation about the future of photography.

Financial market manipulation

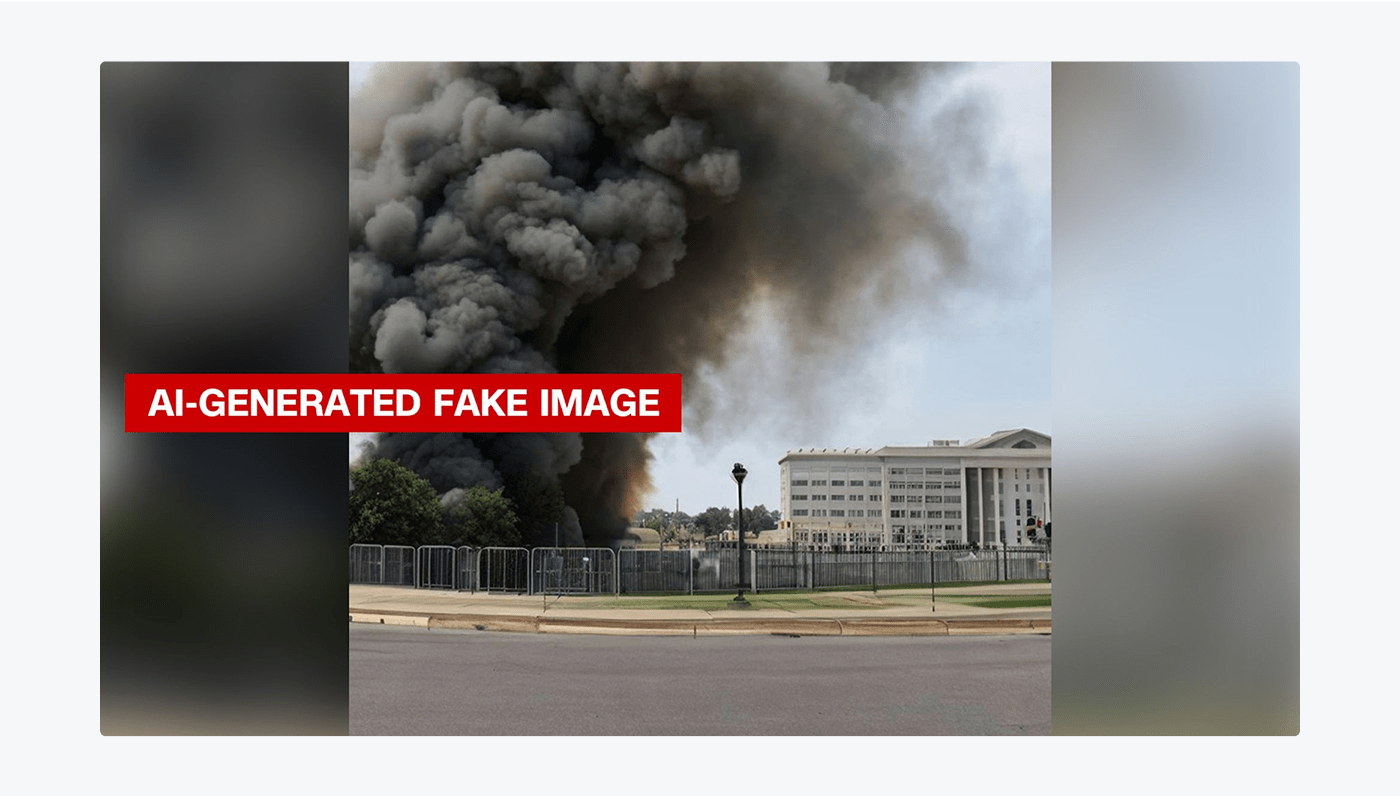

- Pentagon explosion image (2023): In May 2023, an AI-generated image showing a large explosion near the Pentagon went viral on social media, shared by several verified accounts, including one posing as a major news outlet.

The fake image triggered brief panic, and U.S. stock markets dipped momentarily before officials confirmed there had been no incident. The episode highlighted how fast synthetic content can influence public perception and even financial markets before fact-checkers and authorities have time to respond.

- Crypto coin hype scam (2024): A deepfake video of Elon Musk promoting a new coin on X (Twitter) sent the price soaring before it was exposed as a fake.

The clip was realistic enough that thousands of users rushed to invest. Once it was debunked, the token’s value plummeted, leaving many out of pocket and reigniting concerns about market manipulation through AI-generated celebrity endorsements.

Conclusion: navigating a synthetic future

From cloned voices and fake video calls to manipulated political narratives and celebrity scams, AI has already proven it can outwit even the most skeptical among us. As the technology grows more sophisticated, so do the ways it can be used for deception.

Our research shows that two-thirds of respondents are confident in their ability to detect fake content, yet 95% admit they have been fooled at least once, and the growing list of real-world examples tells the same story. Deepfakes and AI-generated hoaxes are not just fringe phenomena; they’re shaping public opinion, swaying markets, and affecting lives in tangible ways.

But this isn’t about fearing AI. It’s about learning to navigate its double-edged potential. With critical thinking, better awareness, and the right tools, we can enjoy the benefits of generative technology while staying a step ahead of the deception it can enable.

Because in the age of synthetic media, one question matters more than ever: Do you really know what you’re looking at?

Methodology

For this study about AI scams and deepfakes, we collected answers from 1,000 respondents via Amazon Mechanical Turk.

Respondents answered 12 questions, most of them multiple choice, and the survey included an attention-check question.

Additional quotes were obtained through HARO (Help a Reporter Out) and Reddit.

Sources

- Deepfake fraud caused financial losses nearing $900 million

- Story of how an AI-generated Polish politician came to our coding interview

- How to Protect Your Business From North Korean IT Worker Scams

- Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’

- US mother gets call from ‘kidnapped daughter’ – but it’s really an AI scam

- Her grandson’s voice said he was under arrest. This senior was almost scammed with suspected AI voice cloning

- Adversarial Misuse of Generative AI

- A Voice Deepfake Was Used To Scam A CEO Out Of $243,000

- Deepfake video of Zelenskyy could be ‘tip of the iceberg’ in info war, experts warn

- Fake Biden robocall tells voters to skip New Hampshire primary election

- DeSantis campaign posts fake images of Trump hugging Fauci in social media video

- Polish president duped by prankster pretending to be Macron in call after missile strike

- Trump’s Deepfake of Obama Is Meant to Change the Subject

- Tom Hanks says AI version of him used in dental plan ad without his consent

- Gayle King slams AI advert that uses manipulated video of her

- Is MrBeast Really Giving Away 10,000 iPhone 15 Pros On TikTok? Here’s What You Need To Know

- Interview question exposes North Korean infiltration in remote hiring

- Trump falsely implies Taylor Swift endorses him

- Facebook’s AI-Generated Spam Problem Is Worse Than You Realize

- Two US lawyers fined for submitting fake court citations from ChatGPT

- Global map: election-related deepfakes reached 3.8B people

- Fake photos of Pope Francis in a puffer jacket go viral, highlighting the power and peril of AI

- Texas professor flunked whole class after ChatGPT wrongly claimed it wrote their papers

- The ‘Irish Times’ mistakenly publishes fake article written by AI

- Sony World Photography Award 2023: Winner refuses award after revealing AI creation

- Fake viral images of an explosion at the Pentagon were probably created by AI

- Elon Musk Impersonated in Suspected Quantum AI Crypto Fraud